By CLAIRE DUFOURD

As AI tools such as Otter.ai and Grammarly become more prominent in newsrooms across Canada, transparency is quickly becoming a source of concern for audiences and reporters alike. And too often, newsrooms default to vague AI disclosures, without a way to know exactly which tools are being used.

That uncertainty is what prompted Angela Misri, a journalism professor at Toronto Metropolitan University, to include a boilerplate AI disclosure on stories published by her student-led newsroom, On The Record (OTR). The message, which appeared at the bottom of every story on the website, read: “This article may have been created with the use of AI software such as Google Docs, Grammarly, and/or Otter.ai for transcription.”

“As I started to see AI tools become more prevalent, I realized that I at least wanted to disclose to the audience that it was something we were exploring,” Misri says. “Because it felt like if I didn’t know exactly what was being used, I should at least try and be transparent for the audience of OTR.”

This disclosure was meant to signal honesty and transparency to her students’ audience; at the time, Misri didn’t exactly know which tools students were using, only that AI was quickly becoming part of their workflow, whether or not it was apparent to her or sanctioned by the university.

The next semester, this short-term solution evolved into something far more concrete: a project that could be implemented in local newsrooms facing similar challenges as they adapt to generative AI.

“I wanted to measure students’ mindfulness when using AI tools,” Misri says. “Adding something more specific than a boilerplate disclosure helped to discover how they were using [them] for things like headline generation, which I hadn’t used it for before.”

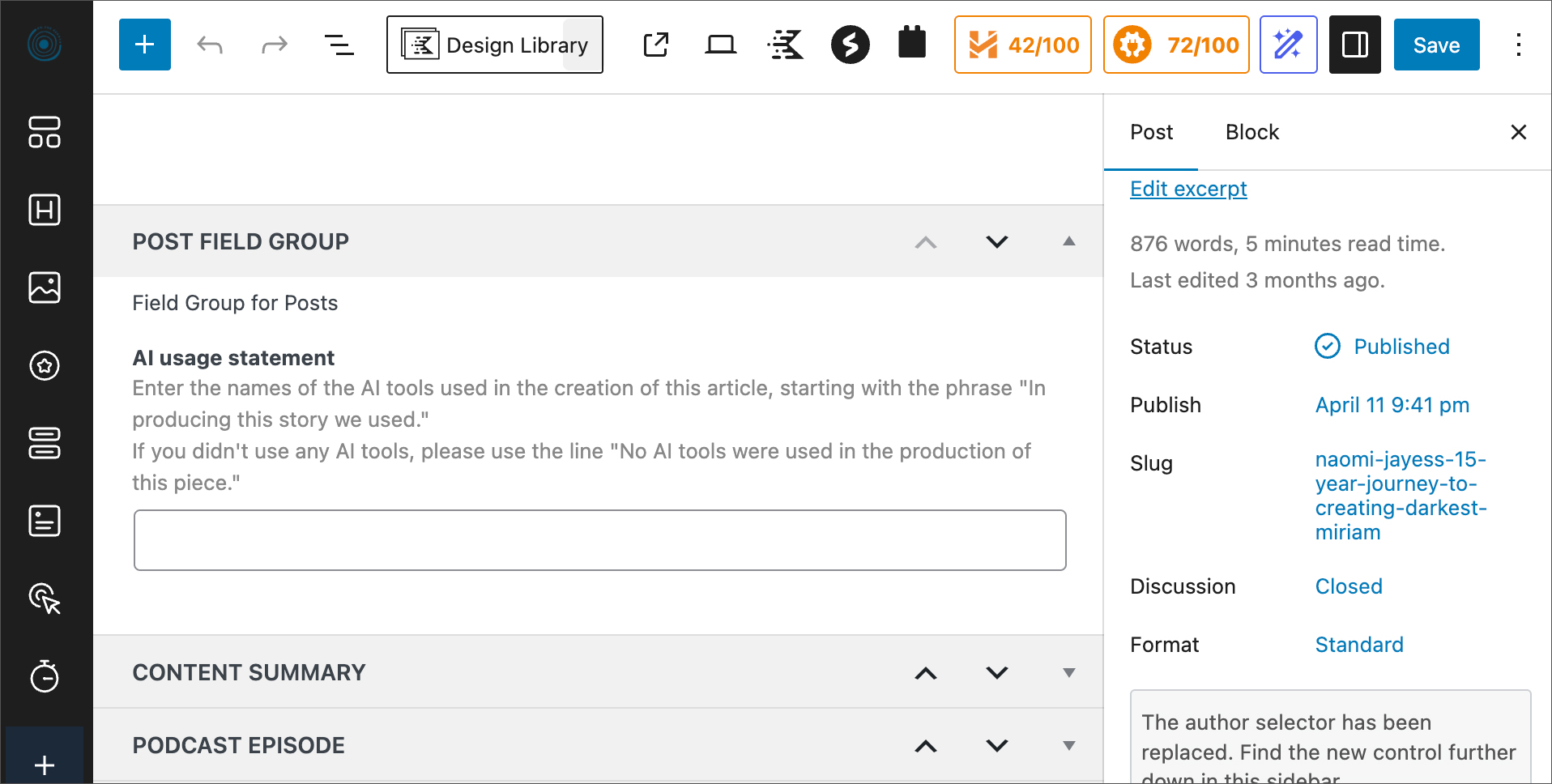

Misri launched a field pilot from the back end of OTR’s publishing system, WordPress. Instead of relying on a boilerplate AI disclosure, students who have taken the course since September 2025 are now prompted to enter exactly which AI tools they used in the making of their article.

Rather than guessing at the use of AI in newsrooms, this disclosure now captures concrete data, establishing patterns that can then be applied to non-student-led newsrooms. These findings can help determine which AI tools are being used in the writing process, and for what purpose.

The process and the findings could then be used in smaller newsrooms across Canada to examine how structured disclosures can support transparency on a local scale.

“I think it could be very interesting for small local newsrooms that want to jump on the AI ‘hype,’” Misri says. “It allows for the openness to talk about AI but also to talk to your audience directly about how you’re using AI tools.”

This field pilot offers an opportunity to analyze how small newsrooms with few resources can evolve from often obsolete ethics statements towards more practical systems tailored to the rise of AI in journalism.

The disclosure field is not meant to discourage reporters from using AI; rather, it prompts journalists to reflect on how they use it. This addition, which Misri hopes to implement on a larger scale, encourages both editors and reporters to think critically about their tools while maintaining transparency with their audience.

“If there are elements of your journalistic work that are hard and that you don’t enjoy where you could get some help from an AI tool, I think that’s great,” Misri says. “I would like to see more transparency from our local newsrooms, and I think this project could be a shared experimental area where we could all learn together.”

What began as an educational choice for Misri can become a model for local newsrooms across the country, one that acknowledges that AI has already found its place in journalism, whether newsrooms intended for it to happen or not.

For those newsrooms operating with a small staff and tight budgets, this approach offers a simple, adaptive model that can be designed, tested and improved over time.

Students who have lived through this experimental disclosure seem to share Misri’s opinion on the topic.

“Honestly, this made me feel reassured that if I were to have the assistance of AI, I wouldn’t be questioned in my integrity,” says Amulyaa Dwivedi, a former OTR student. “It kind of worked like a tracker that allows me to keep myself in check with my AI usage.”

Dwivedi adds that implementing this field pilot in local newsrooms could benefit the reporting process.

“I think if newsrooms were to implement this, it would not only help them be more transparent to their audience but also allow journalists to work more efficiently with the help of AI tools,” she says.